Comments by HGMuller

H. G. Muller wrote on Sun, Mar 10, 2024 04:13 PM UTC in reply to Kevin Pacey from 03:26 PM:

Sure, methods can be wrong, and therefore have to be validated as well. This holds more for true computer studies using engines than for selecting positions from a game database and counting those. The claim that piece A does not have a larger value than B if good players who have A instead of B cannot beat each other more often than not is not really a method. It is the definition of value. So counting the number of wins is by definition a good method. The only thing that might require validation is whether the person who applied this method is able to count. But there is a point where healthy skepticism becomes paranoia, and this seems far over the edge.

Extracting similar statistics from computer-generated games has much larger potential for being in error. Is the level of play good enough to produce realistic games? How sensitive are the result statistics to misconceptions that the engines might have had? It would be expected of someone publishing results from a new method to have investigated those issues. And the method applied to the orthodox pieces should of course reproduce the classical values.

For the self-play method to derive empirical piece values I have of course investigated all that before I started to trust any results. I played games with Pawn odds at many different time controls, as well as with some selected imbalances (such as BB-vs-NN), to see if the number of excess wins was the same fraction of the Pawn-odds advantage. (It was.) And whether the results for a B-N imbalance were different when using an engine that thought B>N than when using one that thought N>B. (They weren't.)

New methods don't become valid because more people apply them; if they all do the same wrong thing they will all confirm each other's faulty results. You validate them by recognizing their potential for error, and then test whether they suffer from this.

H. G. Muller wrote on Sun, Mar 10, 2024 04:18 PM UTC in reply to Jean-Louis Cazaux from 04:09 PM:

I agree completely with Jean-Louis. There is a large difference between chess sets intended as tools for playing chess games, and sets intended for display by collectors. The latter are actually not chess sets at all; they are works of art inspired by chess.

(Images from the Chess-Piece Museum in Rotterdam.)

H. G. Muller wrote on Sun, Mar 10, 2024 09:34 PM UTC in reply to Kevin Pacey from 06:49 PM:

Well, I looked up the exact numbers, and indeed his threshold for including games in the Kaufman study was FM level (2300) for both players. That left him with 300,000 games out of an original 925,000. So what? Are FIDE masters in your eyes such poor players that the games they produce don't even vaguely resemble a serious chess game? And do you understand the consequences of such a claim being true? If B=N is only true for FIDE masters, and not for 2700+ super-GMs, then there is no such thing as THE piece values; apparently they would depend on the level of play. There would not be any 'absolute truth'. So which value in that case would you think is more relevant for the readers of this website? The values that correctly predict who is closer to winning in games of players around 1900 Elo, or those for super-GMs?

Your method to cast doubt on the Kaufman study is tantamount to denying that pieces have a well-defined value in the first place. You don't seem to have much support in that area, though. Virtually all chess courses for beginners teach values that are very similar to the Kaufman values. I have never seen a book that says "Just start assuming all pieces are equally valuable for now, and when you have learned to win games that way, you will be ready to value the Queen a bit more". If players of around 1000 Elo would not be taught the 1:3:3:5:9 rule, they would probably never be able to acquire a higher rating.

The nice thing about computer studies is that you can actually test such issues. You can make the look-ahead so shallow and full of oversights that it plays at the level of a beginner, and still measure how much better or worse it does with a Knight instead of a Bishop, how much its rating would suffer from using a certain set of erroneous piece values to guide its tactical decisions, and whether that is more or less than it would suffer when you improve the reliability of the search to make it a 1500 Elo player.

It would also be no problem at all to generate 300,000 games between 3000+ engines. It doesn't require slow time controls to play at that level, as engines lose only a little Elo when you make them move faster. (About 30 Elo per halving of the time, so giving them 4 sec instead of an hour per game only costs some 300 points of their rating.) So you can generate thousands of games per hour, and then just let the computer run for a week. This is how engines like Leela Chess Zero train themselves. A recent Stockfish patch was accepted after 161,000 self-play games showed that it led to an improvement of 1 Elo...
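The time-control arithmetic in the parenthesis can be checked in a few lines. This is a minimal sketch that assumes the rule of thumb of a constant ~30 Elo lost per halving of the thinking time; the function name `elo_loss` is made up for illustration.

```python
import math


def elo_loss(slow_seconds: float, fast_seconds: float,
             elo_per_halving: float = 30.0) -> float:
    """Estimated rating lost when the time per game shrinks from slow to fast.

    Assumes (a rule of thumb, not a law) a fixed Elo loss per halving of the time.
    """
    halvings = math.log2(slow_seconds / fast_seconds)
    return halvings * elo_per_halving


if __name__ == "__main__":
    one_hour, four_seconds = 3600.0, 4.0
    # 3600 / 4 = 900, which is about 2**9.8, i.e. almost ten halvings
    print(f"halvings: {math.log2(one_hour / four_seconds):.1f}")
    print(f"Elo lost: {elo_loss(one_hour, four_seconds):.0f}")
```

With these numbers the estimate comes out at roughly 294 Elo, matching the "some 300 points" above.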

And in contrast to what you believe, solving chess would not tell you anything about piece values. Solved chess positions (like we have in end-game tables when there are only a few pieces on the board) are evaluated by their distance to mate, irrespective of what material there is on the board. Piece values are a concept for estimating win probability in games of fallible players. With perfect play there is no probability, but a 100% certainty that the game-theoretical result will be reached. In perfect play there is no difference between drawn positions that are 'nearly won' or 'nearly lost'. Both are draws, and a perfect player cannot distinguish them without assuming there is some chance that the opponent will make an error. Then it becomes important whether only a tiny error would bring him into a lost position, or whether it needs a gross blunder or twenty small errors. And again, in perfect play there is no such thing as a small or a large error; all errors are equal, as they all cost 0.5 point, or they would not be errors at all.

So you don't seem to realize the importance of errors. The whole Elo model is built on the assumption that players make (small) errors that weaken their position compared to the optimal move with an appreciable probability, and only seldom play the very best move, so that the advantage performs a random walk along the score scale. Statistical theory teaches us that the sum total of all these 'micro-errors' during the game has a Gaussian probability distribution by the time you reach the end, and that a difference in the average error rate per move, implied by the ratings of the players, determines how much luck the weaker player needs to overcome the systematic drift in favor of the stronger player, and consequently how often he would still manage to draw or win. Nearly equivalent pieces can only be assigned different values because the side with the weaker piece needs a smaller error to blunder the draw away than the side with the stronger piece does. So when the players tend to make equally large errors on average (i.e. are equally strong), the player with the stronger piece becomes less likely to lose than the player with the weaker piece. Without the players making any errors, the game would always stay a draw, and there would be no way to determine which piece was stronger.
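A toy version of this random-walk picture can make the argument concrete. The sketch below is an illustration under stated assumptions, not a model anyone fitted to games: the advantage (in Pawns) starts at the piece-value difference, drifts by a per-move amount reflecting the strength difference, and accumulates Gaussian per-move errors; the game is then scored by where the advantage ends up relative to a draw margin. The 60 moves, the 1.5-Pawn margin and the error sizes are hypothetical example values.

```python
from statistics import NormalDist
from typing import Tuple


def outcome_probabilities(start_advantage: float, drift_per_move: float,
                          error_sd_per_move: float, moves: int,
                          draw_margin: float) -> Tuple[float, float, float]:
    """(win, draw, loss) for side A in a toy random-walk model of a game.

    Positive advantage is good for A. The per-move errors are assumed to be
    independent, so their sum over the game is Gaussian.
    """
    mean = start_advantage + drift_per_move * moves
    sd = error_sd_per_move * moves ** 0.5
    if sd == 0.0:
        # Error-free play: the advantage never moves, so the result is fixed.
        if mean > draw_margin:
            return (1.0, 0.0, 0.0)
        if mean < -draw_margin:
            return (0.0, 0.0, 1.0)
        return (0.0, 1.0, 0.0)
    final = NormalDist(mean, sd)
    loss = final.cdf(-draw_margin)
    win = 1.0 - final.cdf(draw_margin)
    return (win, 1.0 - win - loss, loss)


if __name__ == "__main__":
    # Equally strong players (no drift); A's piece is worth 0.2 Pawn more.
    for sd in (0.0, 0.1, 0.2):
        w, d, l = outcome_probabilities(0.2, 0.0, sd, moves=60, draw_margin=1.5)
        print(f"error sd {sd}: win {w:.3f}  draw {d:.3f}  loss {l:.3f}")
```

With zero error the game is always drawn, as argued above; once errors are allowed, the side with the slightly stronger piece wins more often than it loses.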

H. G. Muller wrote on Sun, Mar 10, 2024 10:23 PM UTC in reply to Kevin Pacey from 10:02 PM:

The problem is that an N vs B imbalance is so small that you would be in the draw zone, and if there aren't sufficiently many errors, or not a sufficiently large one, there wouldn't be any checkmate. A study like Kaufman's, where you analyze statistics in games starting from the FIDE start position, is no longer possible if the level of play gets too high. All 300,000 games would be draws, and most imbalances would not occur in any of the games, because they would be winning advantages, and the perfect players would never allow them to develop. Current top engines already suffer from this problem; the developers cannot determine what is an improvement, because the weakest version is already so good that it doesn't make sufficiently many or large errors to ever lose, at least if you play from the start position with balanced openings. You need a special book that only plays very poor opening lines, which bring one of the players to the brink of losing. Then it becomes interesting to see which version has the better chances to hold the draw.

High level of play is really detrimental for this kind of study, which is all about detecting how much error you need to swing the result.

And then there is still the problem that if it did make a difference, it is the high-level result that would be utterly irrelevant to the readers here. No one here is 2700+ Elo. The only thing of interest here is whether the reader would do better with a Bishop or with a Knight.

H. G. Muller wrote on Mon, Mar 11, 2024 06:11 AM UTC in reply to Kevin Pacey from Sun Mar 10 10:29 PM:

That is a completely wrong impression. After a short period following their creation, during which all commonly used features are implemented, the bugs are ironed out, and the evaluation parameters are tuned, further progress requires originality and becomes very slow, typically in very small steps of 1-5 Elo. Alpha Zero was a unique revolution, using a completely different algorithm for finding moves, which up to that point had never been used, and was actually designed for playing Go. Using neural nets for evaluation in conventional engines (NNUE) was a somewhat smaller revolution, imported from Shogi, which typically caused an 80-Elo jump in strength for all engines that started to use it.

There are currently no ideas on how you could make quantum computers play chess. Quantum computers are not simply faster versions of the computers we have now. They are completely different beasts, able to do some parallelizable tasks very fast by doing them simultaneously. Using parallelism in chess has always been very problematic. I haven't exactly monitored progress in quantum computing, but I would be surprised if they could already multiply two large numbers.

By now it should be clear that the idea of using 2700+ games is a complete bust:

- it measures the wrong thing. We don't want piece values for super-GMs, but for use in our own games.

- it does it in a very inefficient way, because of the high draw rate, and draws telling you nothing.

So even if you believe (or had proved) that piece values are independent of player strength, it would be very stupid to do the measurement at 2700+ level, taking 40 times as many games, each requiring 1000 times longer thinking than if you had done it at the level you are aiming for. If you are smart you do exactly the opposite, measuring at the lowest level (= highest speed) you can afford without altering the results.

Oh, and to answer an earlier question I overlooked: I typically test for Elo-dependence of the results by playing some 800 games at each time control, varying the latter by a factor of 10. 800 games give a statistical error in the result equivalent to some 10 Elo.
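The "800 games is about 10 Elo" figure can be reproduced with two assumptions: a per-game spread of 0.4 (the 40%/sqrt(N) rule of thumb used in these comments) and the logistic form of the Elo curve, E = 1/(1 + 10^(-D/400)), linearized around a 50% score. A minimal sketch; the function names are hypothetical.

```python
import math


def score_sd(games: int, per_game_sd: float = 0.4) -> float:
    """Standard deviation of the score fraction over `games` independent games."""
    return per_game_sd / math.sqrt(games)


def elo_per_unit_score(p: float = 0.5) -> float:
    """Slope dElo/dscore of the logistic Elo curve at expected score p (an assumed model)."""
    return 400.0 / (math.log(10) * p * (1.0 - p))


if __name__ == "__main__":
    sd = score_sd(800)
    print(f"score sd: {100 * sd:.2f}%")                  # about 1.41%
    print(f"in Elo:   {sd * elo_per_unit_score():.1f}")    # about 10 Elo
```

Near 50% one percent of score is worth roughly 7 Elo on the logistic curve, so the 1.4% standard deviation of an 800-game match corresponds to just under 10 Elo.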

H. G. Muller wrote on Mon, Mar 11, 2024 02:37 PM UTC in reply to Bob Greenwade from 02:24 PM:

It seems to me that it would be almost impossible to beat a player that is bent on a draw, and builds a double wall of Pawns to hide behind.

H. G. Muller wrote on Mon, Mar 11, 2024 03:56 PM UTC in reply to Bob Greenwade from 03:31 PM:

"I'd think that the other player's Pawns could whittle that down pretty effectively"

I am not so sure, if the defender tries to avoid trading Pawns as much as possible, and instead pushes them forward when they come under attack. It only requires two interlocked Pawn chains of opposite color to make an impenetrable barrier.

H. G. Muller wrote on Mon, Mar 11, 2024 07:38 PM UTC in reply to Jean-Louis Cazaux from 07:20 PM:

There is a dropdown to select the rendering method on the page where you can edit the preset.

H. G. Muller wrote on Mon, Mar 11, 2024 09:59 PM UTC in reply to Kevin Pacey from 07:11 PM:

"yet you're going right ahead yourself and saying 2700+ play is different"

No, I said that if it was different the 2700+ result would not be of interest, while if they are the same it would be stupid to measure it at 2700+ while it is orders of magnitude easier around 2000. Whether less accurate play would give different results has to be tested. Below some level the games will no longer be realistic; e.g. you could not expect correct values from a random mover.

So what you do is investigate how results for a few test cases depend on Time Control, starting at a TC where the engine plays at the level you are aiming for, and then reducing the time (and thus the level of play) to see where that starts to matter. With Fairy-Max as engine there turned out to be no change in results until the TC dropped below 40 moves/min. Examining the games also showed the cause of that: many games that could be easily won ended in draws because it was no longer searching deep enough to see that its passers could promote. So I conducted the tests at 40 moves/2min, where the play did not appear to suffer from any unnatural behavior.

You make it sound like it is my fault that you make so many false statements that need correcting...

Betza was actually right: the hand that wields the piece can have an effect on the empirical value. This is why I preferred to do the tests with Fairy-Max, which is basically a knowledge-less engine that treats all pieces on an equal basis. If you used an engine that has advanced knowledge of, say, how best to position a piece w.r.t. the Pawn chain for some pieces and not for others, it would become an unfair comparison. And you can definitely make a piece worth less by encouraging very bad handling. E.g. if I gave a large positional bonus for having Knights in the corners, Knights would become almost useless at low search depth. It would never use them. If you tell it a Queen is worth less than a Pawn, the side that starts with a Queen instead of a Rook would lose badly, as it would quickly trade Q for P and be R vs P behind.

The point is that the detrimental behavior that is encouraged here can never be stopped by the opponent. Small misconceptions tend to cancel out. E.g. if you had told the engine that a Bishop pair is worth less than a pair of Knights, the player with the Knights would avoid trading the Knights for Bishops, which is not much more difficult than avoiding the reverse trades, as the values are close. So it won't affect how often the imbalance will be traded away, and while it lasts, the Bishops will do more damage than the Knights, because the Bishop pair in truth is stronger. But there is no way you can prevent the opponent from sacrificing his Queen for a Pawn, even if you have the misconception that the Pawn was worth more.

Note that large search depth tends to correct strategic misconceptions, because it brings the tactical consequences of strategic mistakes within the horizon. Wrecking your Pawn structure will eventually lead to forced loss of a Pawn, so the engine would avoid a wrecked Pawn structure even if it has no clue how to evaluate Pawn structures. Just because it doesn't want to lose a Pawn.

Statistical margins of error are high-school stuff. For N independent games the typical deviation of the total score from its expected value will be the square root of N times the typical deviation of a single game result from the average. (That single-game deviation is at most 0.5, and in practice about 0.4, because not all games end in a 0 or 1 score.) Dividing by N to get a percentage, the typical deviation of the score percentage in a test of N games is 40%/sqrt(N). Having to calculate a square root isn't really advanced mathematics.
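Where the 40% comes from can be seen by computing the single-game spread from the win/draw/loss rates. In the minimal sketch below, the 30/40/30 split is a hypothetical example, chosen only to show that a realistic draw rate pulls the spread down from 0.5 to about 0.4; the function names are made up.

```python
import math


def per_game_sd(win: float, draw: float, loss: float) -> float:
    """Standard deviation of a single game's score (1, 1/2 or 0) given W/D/L rates."""
    mean = win + 0.5 * draw
    variance = (win * (1.0 - mean) ** 2
                + draw * (0.5 - mean) ** 2
                + loss * (0.0 - mean) ** 2)
    return math.sqrt(variance)


def score_margin(games: int, single_game_sd: float) -> float:
    """Typical deviation of the score fraction over `games` independent games."""
    return single_game_sd / math.sqrt(games)


if __name__ == "__main__":
    print(per_game_sd(0.5, 0.0, 0.5))        # no draws: exactly 0.5
    sd = per_game_sd(0.3, 0.4, 0.3)          # 40% draws: sqrt(0.15), about 0.39
    print(f"{sd:.3f}", f"{score_margin(100, sd):.2%}")
```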

H. G. Muller wrote on Tue, Mar 12, 2024 08:15 AM UTC in reply to Jean-Louis Cazaux from 06:47 AM:

A pulldown is a display element you can click to make a short menu appear below it, from which you can then select an option.

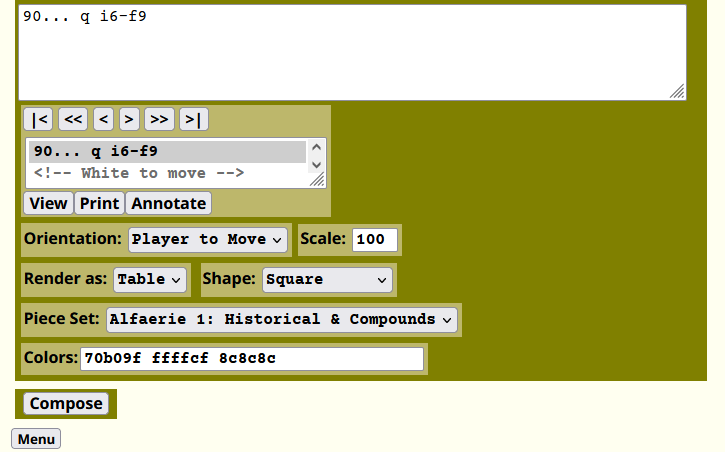

The one you need is labeled "Render as:", third from the bottom on the left in the large greenish block. It doesn't seem to do anything, though.

There is also one in the Edit page you get to by pressing the 'Menu' button and selecting 'Edit' there:

This one worked for me if I press "Test" at the top of the page to get back to the Menu page, and then press Play there.

CSS rendering looks pretty ugly, though: all occupied squares get (1px wide black) borders, the rest of the board not.

H. G. Muller wrote on Tue, Mar 12, 2024 11:29 AM UTC in reply to Kevin Pacey from 12:26 AM:

Systematic errors can never be estimated. There is no limit to how inaccurate a method of measurement can be. The only recourse is to make sure you design the method as well as you can. But what you mention is a statistical error, not a systematic one. Of course the weaker side can win any match with a finite number of games by a fluke. Conventional statistics tells you how large the probability for that is. The probability of being off by 2 standard deviations or more (in either direction) is about 5%; of being off by 3, about 0.27%. It quickly tails off, but to halve the standard deviation you need 4 times as many games.
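The quoted tail probabilities are those of a normal distribution, which the match score approaches for many games. A minimal sketch using the complementary error function from Python's standard library; `games_for_smaller_sd` is a hypothetical helper expressing the 1/sqrt(N) scaling.

```python
import math


def two_sided_tail(k: float) -> float:
    """Probability that a Gaussian result lands k or more SDs from its mean, either side."""
    return math.erfc(k / math.sqrt(2.0))


def games_for_smaller_sd(games: int, shrink: int) -> int:
    """Games needed to divide the standard deviation by `shrink` (SD scales as 1/sqrt(N))."""
    return games * shrink ** 2


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, f"{two_sided_tail(k):.4f}")   # 0.3173, 0.0455, 0.0027
    print(games_for_smaller_sd(100, 2))        # 400
```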

So it depends on how much weaker the weak side is. To demonstrate, with only a one-in-a-million probability of a fluke, that a Queen is stronger than a Pawn wouldn't require very many games: the 20-0 result that you would almost certainly get would only have a one-in-a-million probability if the Queen were not better, but equal. OTOH, to show that a certain material imbalance provides a 1% better result with 95% 'confidence' (i.e. only a 5% chance that it is a fluke), you will need 6400 games (40%/sqrt(6400) = 40%/80 = 0.5%, so a 51% outcome is two standard deviations away from equality).
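Both numbers in this paragraph follow from short calculations. In the sketch below, the sweep probability assumes equal sides and no draws (the case most favourable to a fluke), and `games_needed` inverts the 40%/sqrt(N) rule for a given number of standard deviations; both function names are made up.

```python
import math


def sweep_fluke_probability(games: int) -> float:
    """Chance that two equally strong sides produce a games-0 sweep.

    Assumes every game is decisive with 50% each; draws would only lower it.
    """
    return 0.5 ** games


def games_needed(excess_score: float, sigmas: float = 2.0,
                 per_game_sd: float = 0.4) -> int:
    """Games needed for `excess_score` above 50% to sit `sigmas` SDs from equality."""
    n = (sigmas * per_game_sd / excess_score) ** 2
    return math.ceil(round(n, 6))  # round first so float noise cannot add a game


if __name__ == "__main__":
    print(sweep_fluke_probability(20))  # about 9.5e-07, roughly one in a million
    print(games_needed(0.01))           # 6400
```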

My aim is usually to determine piece values with a standard deviation of about 0.1 Pawn. Since Pawn odds typically causes a 65-70% result, 0.1 Pawn would result in 1.5-2% excess score, and 400-700 games would achieve that (40%/sqrt(400) = 2%). I consider it questionable whether it makes sense to strive for more accurate values, because piece values in themselves are already averages over various material combinations, and the actual material that is present might affect them by more than 0.1 Pawn.
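The 400-700 range follows if the excess score is assumed to scale linearly with the material advantage, so that 0.1 Pawn yields a tenth of the Pawn-odds excess over 50%. A minimal sketch under that assumption, with a per-game spread of 0.4; `games_for_precision` is a hypothetical name.

```python
def games_for_precision(pawn_odds_score: float, precision_pawns: float = 0.1,
                        per_game_sd: float = 0.4) -> int:
    """Games needed so that one SD of the score equals `precision_pawns` Pawns.

    Assumes the excess score is linear in material: a fraction of a Pawn gives
    that fraction of the Pawn-odds excess over 50%.
    """
    target = (pawn_odds_score - 0.5) * precision_pawns   # e.g. 0.2 * 0.1 = 2%
    return round((per_game_sd / target) ** 2)


if __name__ == "__main__":
    for score in (0.65, 0.70):
        print(f"Pawn odds scoring {score:.0%}: {games_for_precision(score)} games")
```

A 70% Pawn-odds result gives 400 games and a 65% result about 711, the two ends of the quoted range.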

I am not sure what you want to say in your first paragraph. You still argue as if there were an 'absolute truth' in piece values. But there isn't. The only thing that is absolute is the distance to checkmate. Piece values are only a heuristic used by fallible players who cannot calculate far enough ahead to see the checkmate. (Together with other heuristics for judging positional aspects.) If the checkmate is beyond your horizon you go for the material you think is strongest (i.e. gives the best prospects for winning), and hope for the best. If material gain is beyond the horizon you go for the position with the current material that you consider best. Above a certain level of play piece values become meaningless, and positions will be judged by other criteria than what material is present. And below that level they cannot be 'absolute truth', because it is not the ultimate level.

I never claimed that statistics of computer-generated games provide uncontestable proof of piece values. But they provide evidence. If a program that human players rated around 2000 Elo have difficulty beating in orthodox Chess hardly does better with a Chancellor as Queen replacement than with an Archbishop (say 54%), it seems very unlikely that the Archbishop would be two Pawns less valuable, as that same engine would have very little trouble converting other uncontested 2-Pawn advantages (such as R vs N, or 2N+P vs R) to a 90% score. It would require pretty strong evidence to the contrary to dismiss that as irrelevant, plus an explanation for why the program systematically blundered that advantage away. But there doesn't seem to be any such evidence at all. That a high-rated player thinks it is different is not evidence, especially if the rating is only based on games where neither A nor C participate. That the average number of moves of A on an empty board is smaller than that of C is not evidence, as it was never proven that piece values only depend on average mobility. (And counter-examples on which everyone would agree can easily be given.) That A is a compound of pieces that are known to be weaker than the pieces C is a compound of is no evidence, as it was never proven that the value of a piece is equal to the sum of its components. (The Queen is an accepted counter-example.)

As to the draw margin: I usually took that as 1.5 Pawn, but that is very close to 4/3, and my only reason to pick it was that it is somewhere between 1 and 2 Pawns. An advantage of 1 Pawn is often not enough; 2 usually is. But 'decisive' is a relative notion. At lower levels games with a two-Pawn advantage can still be lost. GMs would probably not stand much chance against Stockfish even if they were allowed to start with a two-Pawn advantage. At high levels a Pawn advantage was reported by Kaufman to be equivalent to a 200-Elo rating advantage.

H. G. Muller wrote on Tue, Mar 12, 2024 01:16 PM UTC in reply to Kevin Pacey from 11:44 AM:

"Well-defined value" was used there in the sense of "universally valid for everyone who uses them". (Which does not exclude that there are people who do not use them at all, because they have better means for judging positions. Stockfish no longer uses piece values... It evaluates positions entirely by means of a trained neural net.) If that were the case, it would not be of any special interest to specifically investigate their value for high-rated players; any reasonable player would do. I already said it was not clear to me what exactly you wanted to say there, but I perceive this interest in high ratings as somewhat inconsistent. Either the values would be the same as always, and thus not specially interesting, or they would not be universal but dependent on rating, and the whole issue of piece values would not be very relevant. It seems there is no gain either way, so why bother?

H. G. Muller wrote on Tue, Mar 12, 2024 02:24 PM UTC in reply to Fergus Duniho from 01:18 PM:

"That should not be happening, and I have not seen it. Where are you seeing this behavior?"

I tried this preset from the log page, clicked Menu, then Edit, changed the "Render as" to CSS code, and finally pressed Test. That gave me this:

H. G. Muller wrote on Tue, Mar 12, 2024 02:39 PM UTC in reply to Kevin Pacey from 12:00 PM:

Well, I don't read it that way. I don't even quote a precise value, I only say "more like a Rook [than like the Bishop]". That is a pretty wide margin. Of course, the larger the margin, the more undeniable the statement. I don't think that anyone would dare to deny that an FAD or WAD has a value between that of a Pawn and a Queen, even without any study.

So yes, if computer tests show that FA is very similar in value to a Bishop, and FAD similar to a Rook, the statement that FAD is far more valuable than a Bishop seems to have a large-enough safety margin to bet your life on it. Even without computer testing it should be pretty obvious; the FA and N are very similar in mobility and forwardness, and the values of N and B are well known to be very close. Having 50% more moves should count for something, methinks. So the reason I sound very confident in that posting is not only computer studies, but also prevailing player opinion and logical reasoning, which lead to a similar conclusion.

My objection there mainly concerned the fact that 'thinks' could also refer to just a suspicion, and is not the correct term for describing an observation. In a sense no thinking at all goes into a computer study. You just let the computers play, and take notice of the resulting score.

H. G. Muller wrote on Tue, Mar 12, 2024 06:23 PM UTC in reply to Kevin Pacey from 05:43 PM:

Well, the values that Kaufman found were B=N=3.25, R=5 and Q=9.75. So there too 2 minors > R+P (6.5 vs 6), minor > 3P (3.25 vs 3), 2R > Q (10 vs 9.75). Only 3 minors = Q. Except of course that this ignores the B-pair bonus; 3 minors are bound to involve at least one Bishop, and if that broke the pair... So in almost all cases 3 minors > Q.
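The comparisons can be checked mechanically from the quoted values. In this minimal sketch the piece values are Kaufman's as listed above; the optional Bishop-pair bonus of 0.5 Pawn in the example is only an illustrative number, not taken from this comment, and the pair test naively counts any two Bishops as a pair.

```python
# Kaufman's values as quoted in the comment above, in Pawns.
VALUES = {"P": 1.0, "N": 3.25, "B": 3.25, "R": 5.0, "Q": 9.75}


def material(pieces: str, bishop_pair_bonus: float = 0.0) -> float:
    """Sum the piece values; add the (assumed) bonus when the group has two Bishops."""
    total = sum(VALUES[p] for p in pieces)
    if pieces.count("B") >= 2:
        total += bishop_pair_bonus
    return total


def compare(a: str, b: str, bishop_pair_bonus: float = 0.0) -> str:
    """'>', '<' or '=' between the material of groups a and b."""
    va, vb = material(a, bishop_pair_bonus), material(b, bishop_pair_bonus)
    return ">" if va > vb else "<" if va < vb else "="


if __name__ == "__main__":
    for a, b in [("NB", "RP"), ("N", "PPP"), ("RR", "Q"), ("NNB", "Q")]:
        print(f"{a} {compare(a, b)} {b}   ({material(a)} vs {material(b)})")
    # Hypothetical 0.5-Pawn pair bonus: three minors that include both Bishops.
    print("BBN", compare("BBN", "Q", bishop_pair_bonus=0.5), "Q")
```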

You can also see the onset of the leveling effect in the Q-vs-3-minors case: it is not only bad in the presence of extra Bishops (making sure the Q is opposed by a pair), but also in the presence of extra Rooks. These Rooks would suffer much more from the presence of three opponent minors than they suffer from the presence of an opponent Queen. (But this of course transcends the simple theory of piece values.) So the conclusion would be that the only case where you have equality is Q vs NNB plus Pawns. This could very well be correct without contradicting the claim that 2 minors are in general stronger.

BTW, in his article Kaufman is already skeptical about the Q value he found, and says that he personally would prefer a value of 9.50.

If you don't recognize the B-pair as a separate term, then it is of course no miracle that you find the Bishop on average to be stronger, because in a large part of the cases it will be part of a pair.

H. G. Muller wrote on Wed, Mar 13, 2024 06:51 AM UTC in reply to Kevin Pacey from Tue Mar 12 07:44 PM:

The problem with Pawns is that they are severely area-bound, so that not all Pawns are equivalent, and some of these 'sub-types' cooperate better than others. Bishops in principle suffer from this too, but one seldom has them on equal shades. (But still: good Bishop and bad Bishop.) So you cannot speak of THE Pawn value; depending on the Pawn constellation it might vary from 0.5 (doubled Rook Pawn) to 2.5 (7th-rank passer).

Kaufman already remarked that a Bishop appears to be better than a Knight when pitted against a Rook, which means it must have been weaker in some other piece combinations to arrive at an equal overall average. But I think common lore has it that Knights are particularly bad if you have Pawns on both wings, or in general, Pawns that are spread out. By requiring that the extra Pawns are connected passers you would more or less ensure that: there must be other Pawns, because in a pure KRKNPP end-game the Rook has no winning chances at all.

Rules involving a Bishop, like Q=R+B+P are always problematic, because it depends on the presence of the other Bishop to complete the pair. And also here the leveling effect starts to kick in, although to a lesser extent than with Q vs 3 minors. But add two Chancellors and Archbishops, and Q < R+B. (So really Q+C+A < C+A+R+B).

📝H. G. Muller wrote on Wed, Mar 13, 2024 12:52 PM UTC in reply to Florin Lupusoru from Mon Mar 11 03:28 PM:

I have now improved the new Diagram script to recognize checkmates faster, and it now deals with the opening threats 1.Pj6 and 1.Pj6 SE11n 2.BGi6 at 2.5 ply. It also punishes the wrong defense 1.Pj6 o11?

This version uses marker symbols for indicating the promotion/deferral choice. What do you think?

📝H. G. Muller wrote on Wed, Mar 13, 2024 08:24 PM UTC in reply to A. M. DeWitt from Fri May 26 2023 05:53 PM:

OK, how about this?

H. G. Muller wrote on Wed, Mar 13, 2024 08:49 PM UTC in reply to Fergus Duniho from Tue Mar 12 04:56 PM:

"Make the images 50x50 instead of 48x48. This will stop the need to pad these images when the squares are 50x50."

I think this would be the proper solution. Most Alfaerie images are 50x50; I have no idea how some (mainly of the orthodox pieces) came to be 48x48 or 49x49. I wouldn't know how to make a palette PNG image; I usually generate the AlfaeriePNG images with fen2.php from SVG images, and fen2.php is based on C code I took from XBoard, which uses a function to save a bitmap as a PNG file, described as a 'toy interface' that offers little or no control of the image properties. (But it works.)

When I have time I will re-render these pieces at the proper size.

📝H. G. Muller wrote on Thu, Mar 14, 2024 07:34 AM UTC in reply to A. M. DeWitt from 01:20 AM:

"However, I did notice a bug that causes the promotion choices to replace pieces on the squares they are shown on if you select something other than a promotion choice."

OK, fixed that.

Finding the mate threats is also significantly sped up in this version, by the use of a mate-killer heuristic: once it finds a checkmate it searches the same sequence of its own moves first in order to refute alternative opponent moves. As in large variants almost all moves do the same (namely nothing to address the problem), that makes their refutation almost optimal, and partly compensates for the slowdown by lines with checks in them being searched deeper.

The search algorithm now extends the depth a full ply for the first check evasion it tries (at any depth, not just at the horizon), and half a ply for alternative check evasions. After 1.j6 it now finds 1... SEn11 after 1.6, 3 or 4.6 sec at 1.5/2/2.5 ply. After 2.BGi6 it then finds 2... m11 in 12 or 33 sec (2/2.5 ply). After 1... o11? it finds VGn9! in 8.6 or 14 sec (2/2.5 ply). Not yet with a mate score, but because it sees the opponent has to sac its GG to push the mate over the horizon. At 3 ply it does get the mate score in 18.6 sec.
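The two search tweaks described above can be sketched independently of any particular engine. This is a hypothetical Python illustration, not the Diagram's actual code: depth is counted in half-plies so the half-ply extension stays an integer, the mate killer is modelled as one remembered move per ply that is tried first wherever it is legal, and the move strings are made up.

```python
from typing import Dict, List, Optional, Sequence

# Depth is counted in half-plies so that half-ply extensions stay integers.
FULL_PLY = 2
HALF_PLY = 1


def child_depth(depth: int, in_check: bool, move_index: int) -> int:
    """Remaining depth after making the move_index-th move at this node.

    One ply is used up by the move; when the side to move is in check, the first
    evasion tried gets that ply back in full and later evasions get half of it.
    """
    extension = 0
    if in_check:
        extension = FULL_PLY if move_index == 0 else HALF_PLY
    return depth - FULL_PLY + extension


class MateKillers:
    """Remembers, per ply, a move that belonged to a checkmating line."""

    def __init__(self) -> None:
        self.by_ply: Dict[int, str] = {}

    def record(self, ply: int, move: str) -> None:
        self.by_ply[ply] = move

    def order(self, ply: int, moves: Sequence[str]) -> List[str]:
        """Put the stored mate move first (if legal here); keep the rest in order."""
        killer: Optional[str] = self.by_ply.get(ply)
        if killer is not None and killer in moves:
            return [killer] + [m for m in moves if m != killer]
        return list(moves)


if __name__ == "__main__":
    killers = MateKillers()
    killers.record(3, "Qh7")             # hypothetical mating move found at ply 3
    print(killers.order(3, ["a3", "Qh7", "Nf3"]))
    print(child_depth(6, in_check=True, move_index=0),
          child_depth(6, in_check=True, move_index=1),
          child_depth(6, in_check=False, move_index=0))
```

Trying the remembered mating move first is what makes refuting the many "do-nothing" replies cheap: each one is answered by the same mate at the first attempt.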

[Edit] Oh, and the Diagram had a bug. FD and +WB did not burn when capturing other FD, because there was one @ too few in their row of the capture matrix (for the empty square). Fixed that too.

H. G. Muller wrote on Thu, Mar 14, 2024 09:36 AM UTC in reply to Fergus Duniho from Wed Mar 13 10:12 PM:

It appears almost all alfaerie GIF images are 50x50. Some (including the orthodox pieces) are 49x49. Elephants and their derivatives are even 53x50. I recall that I had once seen a 48x48 GIF too, but forgot which that was.

Almost all PNG images are 48x48, though. Only a few that I recently made (mostly compounds done by fen2.php) are 50x50. I produced many of the SVG from which these are derived, but I think Greg had a script that he used to 'bulk convert' the SVG to PNG. He must have used 48x48 in this script. Why he picked that size is unclear to me, as amongst the GIF images it is virtually non-existent.
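A survey like this can be automated by reading the dimensions straight from the file headers: a GIF stores its logical screen width and height as little-endian 16-bit values at byte offsets 6 to 9, and a PNG stores them as big-endian 32-bit values in the IHDR chunk at offsets 16 to 23. The sketch below assumes a local copy of the image directory; `image_size` and `survey` are hypothetical helper names. For GIFs the logical screen size is reported, which normally equals the image size.

```python
import struct
from collections import Counter
from pathlib import Path

PNG_SIG = b"\x89PNG\r\n\x1a\n"


def image_size(data: bytes):
    """Return (width, height) of a GIF or PNG from its header bytes, or None."""
    if len(data) >= 10 and data[:6] in (b"GIF87a", b"GIF89a"):
        return struct.unpack("<HH", data[6:10])   # logical screen size, LE
    if len(data) >= 24 and data[:8] == PNG_SIG and data[12:16] == b"IHDR":
        return struct.unpack(">II", data[16:24])  # IHDR width/height, BE
    return None


def survey(directory):
    """Count how often each (extension, size) pair occurs in a directory."""
    sizes = Counter()
    for path in Path(directory).iterdir():
        ext = path.suffix.lower()
        if ext in (".gif", ".png"):
            with path.open("rb") as f:
                size = image_size(f.read(24))
            if size:
                sizes[(ext, size)] += 1
    return sizes
```

Calling `survey()` on a local copy of a directory then gives a frequency table of sizes per format.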

I think it is undesirable that GIF and PNG images have different sizes. It should be our long-term goal to upgrade all images to the (anti-aliased) PNG, and having different sizes would obstruct that.

So what to do? Should I try to re-render all the alfaeriePNG images at 50x50? I suppose I could make my own script for that, at least for everything that we have as SVG and that is not a compound or post-edited (to apply crosses and such).

💡📝H. G. Muller wrote on Thu, Mar 14, 2024 12:56 PM UTC in reply to Bn Em from 11:56 AM:

The idea of a fixed move that can be described by a notation implies a board with regular tiling. Such a tiling can have other connectivity than the usual 8-neighbor square-cell topology, e.g. hexagons or triangles. But these could have their own, completely independent system of atoms and directions, reusing the available letters for their own purposes.

I have thought about supporting hexagonal boards in the Interactive Diagram. This could be done by representing the board as a table with a column width of half the piece-image size, and giving every table cell colspan="2", in a brick-like (masonry) pattern. That would distort the board into a parallelogram. A numerical parameter hex=N could then specify how large a triangle to cut off at the acute corners. The board could then be displayed without border lines and with transparent square shades, so that a user-supplied whole-board image with hexagons could be put up as the background for the entire table. This would purely be a layout issue; the rest of the I.D. would know nothing about it. In particular, the moves of the pieces would have to be described in normal Betza notation, as if the pieces were moving on the unslanted board. E.g. a hexagonal Rook would be flbrvvssQ.
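To make the "unslanted board" idea concrete, here is a minimal sketch of hexagonal Rook moves in square-board coordinates (file, rank). It assumes the masonry offset makes the fl/br diagonal, i.e. steps (-1,+1) and (+1,-1), connect hex neighbours, consistent with the flbrvvssQ example. With that orientation the acute corners are (0,0) and the opposite corner, and the assumed reading of hex=N removes every cell within hex distance N-1 of them. `on_board`, `hex_rook_moves` and `HEX_STEPS` are hypothetical names, not part of the Interactive Diagram.

```python
# The six hex directions on the unslanted square board (df, dr):
# sideways, vertical, and the fl/br diagonal (assumed orientation).
HEX_STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1), (-1, 1), (1, -1)]


def on_board(f, r, files, ranks, hex_cut):
    """True if (f, r) is a cell of the parallelogram board after cutting
    hex_cut-sized triangles off the two acute corners."""
    if not (0 <= f < files and 0 <= r < ranks):
        return False
    # For f, r >= 0 the hex distance from the corner (0, 0) is simply f + r.
    if f + r < hex_cut:
        return False
    if (files - 1 - f) + (ranks - 1 - r) < hex_cut:
        return False
    return True


def hex_rook_moves(f, r, files, ranks, hex_cut, occupied=frozenset()):
    """Squares a hexagonal Rook on (f, r) can reach; it stops on the first
    occupied square (a potential capture) or at the board edge."""
    moves = []
    for df, dr in HEX_STEPS:
        nf, nr = f + df, r + dr
        while on_board(nf, nr, files, ranks, hex_cut):
            moves.append((nf, nr))
            if (nf, nr) in occupied:
                break
            nf, nr = nf + df, nr + dr
    return moves
```

The move generator never needs to know about hexagons beyond the choice of step vectors, which matches the point that hexagonal support could be purely a layout issue.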

About castlings: one can of course think up any sort of crazy move and present it as a castling option. But in orthodox Chess castling exists to fulfil a real need, rather than the desire to also have a move that relocates two pieces at once. The point is to provide a way to get your King to safety without trapping your Rook, and without having to break the Pawn shield. Sandwiching a piece between your Rook and King doesn't seem to serve a real purpose. If the piece could easily leave, you could have done that before castling, and if not, then it is still semi-trapped.

A more sensible novel form of castling would be one where multiple pieces end up on the other side of the King. E.g. when the piece next to the Rook is a Moa ([F-W]), conventional castling is not up to the task. You would need a castling that moves the Rook to f1 and the Moa to e1 upon castling to g1 on 8x8. Of course you could argue that this is a defect of the start position, and could better be solved by choosing a setup that had a jumping piece on b1/g1.
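One way to picture such a castling is as a single move that relocates several pieces simultaneously: all of them are lifted first and then dropped, so the Moa can land on the King's start square. The sketch below assumes a board stored as a dict from square name to piece; `apply_simultaneous` and `MOA_CASTLING` are hypothetical names, and legality checks (empty squares, not castling through check) are left out.

```python
def apply_simultaneous(board, relocations):
    """Return a new board with several pieces moved at once.

    relocations: list of (from_square, to_square). All pieces are lifted
    before any is dropped, so they may leapfrog each other.
    """
    new = dict(board)
    lifted = [(dst, new.pop(src)) for src, dst in relocations]
    for dst, piece in lifted:
        if dst in new:
            raise ValueError(f"destination {dst} is occupied")
        new[dst] = piece
    return new


# Kingside castling on 8x8 with a Moa ([F-W]) next to the Rook (hypothetical setup):
# King e1->g1, Rook h1->f1, Moa g1->e1.
MOA_CASTLING = [("e1", "g1"), ("h1", "f1"), ("g1", "e1")]
```

Applying `MOA_CASTLING` to a board with K on e1, Moa on g1 and R on h1 leaves K on g1, R on f1 and the Moa on e1.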

💡📝H. G. Muller wrote on Thu, Mar 14, 2024 04:07 PM UTC in reply to Fergus Duniho from 03:34 PM:

Actually I agree that XBetza has become too obfuscated, and that it has grown that way because of its use as move definition in the Interactive Diagram, where there was demand for pieces with ever more complex moves. But the bracket notation for describing multi-leg moves is much more intuitive, and hardly any less intuitive than the original Betza notation was for single-leg moves.

But it should be kept in mind that the complex, hard-to-understand descriptions hardly ever occur in practice. The overwhelming majority of variants never get any further than symmetric slider-leaper compounds, which are dead simple. Personally I think it is much easier to read or write BN than "bishop-knight compound" all the time. It is certainly easier for a computer to understand than colloquial English, and it is not beyond the understanding of most people (whereas programming languages are). The reason Betza's funny notation has become so popular is mainly that, although it potentially can be complex, the cases needed in practice are quite simple.
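How simple the common case is can be seen from a short expander for unmodified symmetric compounds such as BN. It is a sketch assuming only plain atom letters (W, F, D, N, A, H, C, Z, G) plus the K, R, B, Q shorthands; modifiers, doubled-letter riders and XBetza extensions are deliberately not handled. `expand`, `symmetric`, `ATOMS` and `SHORTHAND` are hypothetical names.

```python
from itertools import product

# One representative leap vector per basic atom.
ATOMS = {"W": (1, 0), "F": (1, 1), "D": (2, 0), "N": (2, 1), "A": (2, 2),
         "H": (3, 0), "C": (3, 1), "Z": (3, 2), "G": (3, 3)}
# Shorthand letters -> list of (atom, is_slider).
SHORTHAND = {"K": [("W", False), ("F", False)], "R": [("W", True)],
             "B": [("F", True)], "Q": [("W", True), ("F", True)]}


def symmetric(vec):
    """All 8-fold symmetric images of a leap vector (duplicates removed)."""
    x, y = vec
    out = set()
    for a, b in ((x, y), (y, x)):
        for sa, sb in product((1, -1), repeat=2):
            out.add((sa * a, sb * b))
    return out


def expand(betza):
    """Expand unmodified Betza letters, e.g. 'BN', into a dict mapping
    step vector -> True (slides in that direction) or False (single leap)."""
    moves = {}
    for letter in betza:
        for atom, slider in SHORTHAND.get(letter, [(letter, False)]):
            for v in symmetric(ATOMS[atom]):
                moves[v] = moves.get(v, False) or slider
    return moves
```

For BN this yields four sliding diagonal directions plus the eight Knight leaps, which is everything a program needs to know about the piece.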

BTW, the Play-Test Applet already contains a Betza-to-English converter, even though it is not always perfect yet.
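For the simple compounds that dominate in practice, a Betza-to-English conversion can be as small as a lookup table. This is only an illustrative sketch assuming unmodified letters; it is not the Play-Test Applet's converter, and `to_english` and `NAMES` are hypothetical names.

```python
NAMES = {"W": "wazir", "F": "ferz", "D": "dabbaba", "N": "knight",
         "A": "alfil", "H": "threeleaper", "C": "camel", "Z": "zebra",
         "G": "tripper", "K": "king", "R": "rook", "B": "bishop",
         "Q": "queen"}


def to_english(betza):
    """Describe an unmodified Betza compound such as 'BN' in words."""
    names = [NAMES[letter] for letter in betza]
    if len(names) == 1:
        return names[0]
    return "-".join(names) + " compound"
```

Anything with modifiers or multi-leg brackets would need a real parser, which is where a full converter becomes harder to get perfect.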

H. G. Muller wrote on Thu, Mar 14, 2024 06:31 PM UTC in reply to Fergus Duniho from 05:33 PM:

I have rendered all images in the alferieSVG directory now as 50x50 PNG using fen2.php?s=50&p=..., in the directory /graphics.dir/alfaeriePNG50. They look like this

(The shell script I used for this is /graphics.dir/alfaeriePNG50/x, and then y to give them the desired filename.)


I am not sure what you are trying to demonstrate with this example. Obviously something that has never been tested in any way, but was just pulled out of the hat by the one who suggests it, should be considered of questionable value, and should be accompanied by a warning. As Dr. Nunn does for untested opening lines. And as I do for untested piece values. That is an entirely different situation from mistrusting someone who reports the results of an elaborate investigation just because he is the only one so far who has done such an investigation. It is the difference between someone being a murder suspect merely because he has no alibi, and having an eyewitness who testifies under oath that he saw him do it. That seems a pretty big difference. And we are talking here about publication of results that are in principle verifiable, as they were accompanied by a description of the method used to obtain them, which others could repeat. That is like a murder in front of an audience, where so far only one of the spectators has testified. I don't think that the police in that case would postpone the arrest until other witnesses were located and interviewed. But they would not arrest all the people who have no alibi.

And piece values are a lot like opening lines. It is trivial to propose them as an educated guess, but completely non-obvious what the result would be of actually playing that opening line, or of using these piece values to guide your play. It is important to know whether they are merely proposed as a possibility, or whether evidence of any kind has been collected that they actually work.